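Whether a cluster is online (production) or offline (internal), it has plenty of persistent storage needs; for example, storing build environment data to speed up CI. These needs can be met by mounting volumes manually or by creating PVs and PVCs by hand, but neither is as convenient as dynamic volumes. Kubernetes supports many dynamic volume backends, such as the previously mentioned GlusterFS and Ceph. Because distributed storage is fairly complex, I chose NFS-backed dynamic volumes for my offline cluster.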

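Let's look at how to set up NFS dynamic volumes in Kubernetes and how to use them. Later posts will also use NFS dynamic volumes to store data for databases such as MySQL and PostgreSQL.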

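Later I will also describe how to run the code quality platform SonarQube in Docker, deploy it to Kubernetes, and store its data in a PostgreSQL database backed by an NFS dynamic volume.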

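NFS server installation

An NFS service must be installed first. For details, see the post "KUBERNETES 存储 NFS 部署" (Kubernetes storage: NFS deployment).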

RBAC

Because RBAC is enabled on this Kubernetes cluster, first create rbac.yaml:

    ---
    # Identity the provisioner pod runs as
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      namespace: mysql
    ---
    # Permissions the provisioner needs to create and delete PVs for PVCs.
    # Note: rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
    # newer clusters use rbac.authorization.k8s.io/v1.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["list", "watch", "create", "update", "patch"]
    ---
    # Grant the ClusterRole to the ServiceAccount above
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: mysql
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io

nfs-client-provisioner

Create the provisioner Deployment:

    kind: Deployment
    apiVersion: extensions/v1beta1
    metadata:
      name: nfs-client-provisioner
      namespace: mysql
    spec:
      replicas: 1
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccount: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.io/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                # Must match the provisioner field of the StorageClass
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                # Replace x.x.x.x with the address of the NFS server
                - name: NFS_SERVER
                  value: x.x.x.x
                # Exported directory under which volumes are created
                - name: NFS_PATH
                  value: /home/nfs/mariadb/mariadb00
          volumes:
            # Same server and path as NFS_SERVER and NFS_PATH above
            - name: nfs-client-root
              nfs:
                server: x.x.x.x
                path: /home/nfs/mariadb/mariadb00
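
The Deployment above uses extensions/v1beta1, which Kubernetes stopped serving for Deployments in version 1.16. On newer clusters the same object can be written under apps/v1, which additionally requires spec.selector. The following is only a sketch under that assumption: names, image, and paths are copied from the manifest above, x.x.x.x is still a placeholder, and the image has not been verified against newer clusters here.

```yaml
# Sketch: the provisioner Deployment expressed with apps/v1.
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: mysql
spec:
  replicas: 1
  strategy:
    type: Recreate
  # apps/v1 requires an explicit selector that matches the pod labels
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      # serviceAccountName is the current name of the serviceAccount field
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: x.x.x.x          # placeholder: NFS server address
            - name: NFS_PATH
              value: /home/nfs/mariadb/mariadb00
      volumes:
        - name: nfs-client-root
          nfs:
            server: x.x.x.x           # placeholder: NFS server address
            path: /home/nfs/mariadb/mariadb00
```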

Create the NFS StorageClass in class.yaml:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
      # StorageClass is a cluster-scoped resource; the namespace here is not needed
      namespace: mysql
    provisioner: fuseim.pri/ifs # or choose another name; must match the Deployment's PROVISIONER_NAME env
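
If most claims in the cluster should land on NFS, the class can also be marked as the default, so that PVCs which name no class are provisioned by it. This is an optional variation and not part of the original setup; it assumes the DefaultStorageClass admission controller is enabled and that no other class is already marked as default.

```yaml
# Sketch: the same StorageClass, marked as the cluster default.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    # PVCs that do not specify a class will use this one
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: fuseim.pri/ifs   # must match the Deployment's PROVISIONER_NAME env
```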

Testing

Create a test PVC:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: test-claim
      namespace: mysql
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Mi
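
The volume.beta.kubernetes.io/storage-class annotation is the older way of selecting a class. Since Kubernetes 1.6 the same request can be expressed with the spec.storageClassName field. A minimal sketch of the equivalent claim, reusing the names from the test PVC above:

```yaml
# Sketch: the test claim using spec.storageClassName instead of the beta annotation.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  namespace: mysql
spec:
  storageClassName: managed-nfs-storage   # name of the StorageClass created earlier
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

Use one form or the other for a given claim, not both.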

Create a test pod:

    kind: Pod
    apiVersion: v1
    metadata:
      name: test-pod
      namespace: mysql
    spec:
      containers:
        - name: test-pod
          image: gcr.io/google_containers/busybox:1.24
          command:
            - "/bin/sh"
          args:
            - "-c"
            # Succeeds only if a file can be written to the NFS-backed volume
            - "touch /mnt/SUCCESS && exit 0 || exit 1"
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/mnt"
      restartPolicy: "Never"
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: test-claim

Using it in a MySQL (MariaDB) StatefulSet

    # Note: apps/v1beta1 was removed in Kubernetes 1.16; newer clusters use
    # apps/v1, which also requires spec.selector.
    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: mariadb
      namespace: mysql
    spec:
      serviceName: "mariadb"
      replicas: 1
      template:
        metadata:
          labels:
            app: mariadb
        spec:
          terminationGracePeriodSeconds: 10
          containers:
            - name: mariadb
              image: mariadb:10.1.22@sha256:21afb9ab191aac8ced2e1490ad5ec6c0f1c5704810d73451dd124670bcacfb14
              ports:
                - containerPort: 3306
                  name: mysql
                - containerPort: 4444
                  name: sst
                - containerPort: 4567
                  name: replication
                - containerPort: 4567
                  protocol: UDP
                  name: replicationudp
                - containerPort: 4568
                  name: ist
              env:
                - name: MYSQL_ROOT_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysql-secret
                      key: rootpw
                - name: MYSQL_INITDB_SKIP_TZINFO
                  value: "yes"
              args:
                - --character-set-server=utf8mb4
                - --collation-server=utf8mb4_unicode_ci
                # Remove after first replicas=1 create
                - --wsrep-new-cluster
              volumeMounts:
                - name: mysql
                  mountPath: /var/lib/mysql
                - name: conf
                  mountPath: /etc/mysql/conf.d
                - name: initdb
                  mountPath: /docker-entrypoint-initdb.d
          volumes:
            - name: conf
              configMap:
                name: conf-d
            - name: initdb
              emptyDir: {}
      volumeClaimTemplates:
        - metadata:
            name: mysql
            annotations:
              # Must name an existing StorageClass; the one created earlier
              # in this article is called managed-nfs-storage
              volume.beta.kubernetes.io/storage-class: "nfs-storage"
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 30Gi
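
The StatefulSet refers to three objects that are not shown in this article: the Secret mysql-secret (key rootpw), the ConfigMap conf-d, and the governing Service mariadb named in serviceName. The sketch below shows roughly what they might look like. All values are placeholders assumed for illustration: the password, the ConfigMap file name and its single setting are hypothetical, and a real Galera setup would need its own wsrep configuration in conf-d.

```yaml
# Sketch: supporting objects assumed by the StatefulSet (placeholder values).
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
  namespace: mysql
type: Opaque
stringData:
  rootpw: change-me            # hypothetical password; replace before use
---
# Headless Service matching serviceName: "mariadb" in the StatefulSet
apiVersion: v1
kind: Service
metadata:
  name: mariadb
  namespace: mysql
spec:
  clusterIP: None
  selector:
    app: mariadb
  ports:
    - name: mysql
      port: 3306
---
# Files in this ConfigMap are mounted at /etc/mysql/conf.d
apiVersion: v1
kind: ConfigMap
metadata:
  name: conf-d
  namespace: mysql
data:
  example.cnf: |               # hypothetical file name and content
    [mysqld]
    max_connections = 200
```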

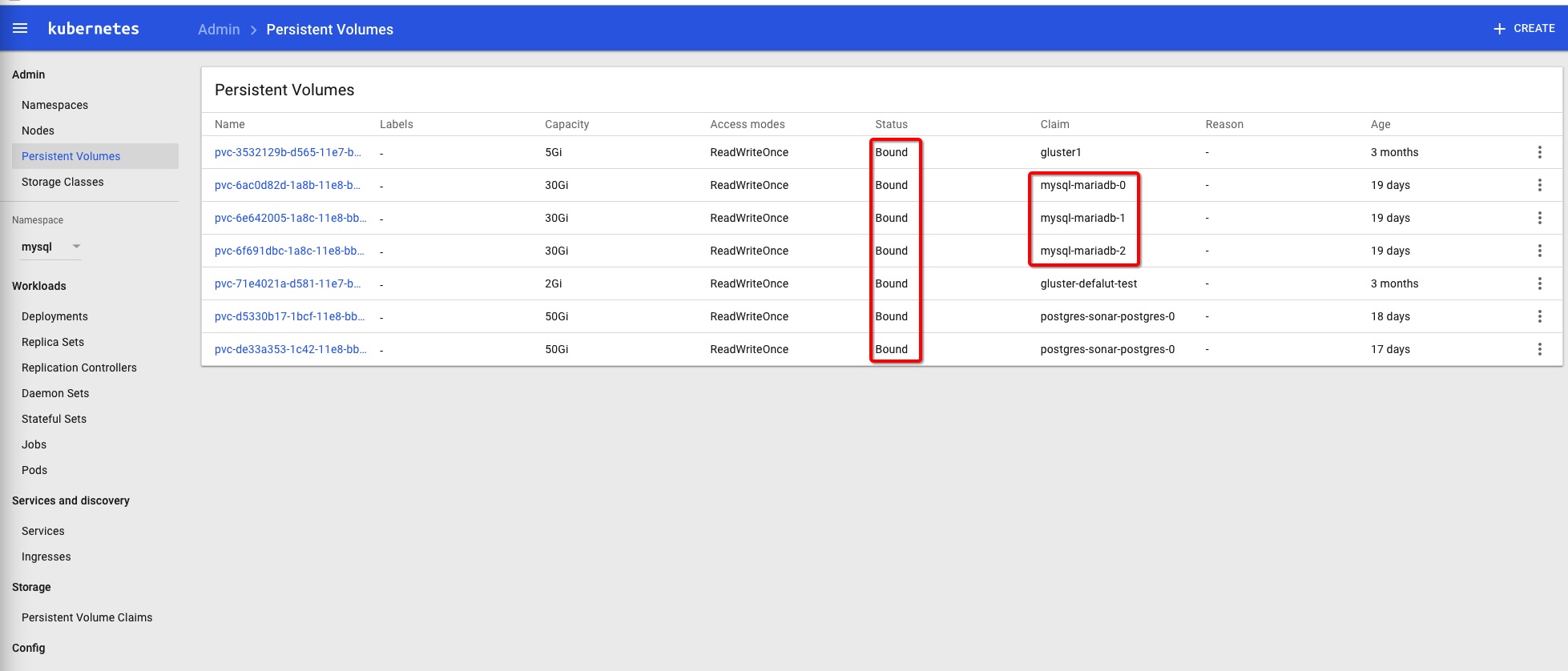

创建成功后,在kubernetes dashboard 页面可以看到自动创建的PV,并且状态是Bound,如下图。